How To Embark On Developing Good Online Assessments

Last month’s article discussed the many benefits of computer-based assessments, but it ended with a caveat. Technology cannot make a poorly designed assessment better; it just scales a poorly designed assessment to more people. In fact, the hardest part of developing good online assessments often happens offline: designing assessments that are reliable, fair, valid, and transparent.

This month's article picks up where that caveat left off. While we can't lay out everything needed to develop good online or computer-based assessments, this article offers five guidelines for developing assessments that are fair, reliable, transparent, and valid; in other words, assessments that are "good".

1. Remember The Before, During And After Of Assessment

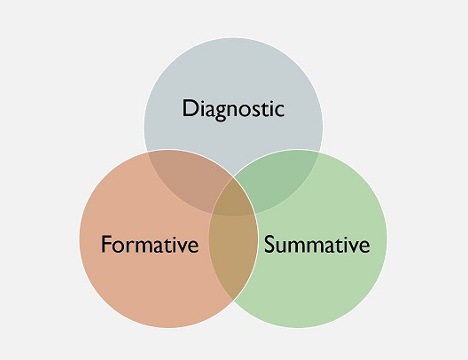

Figure 1: The "triple function" of assessment--assessing what learners know before, during and after the formal learning exercise.

Assessment doesn’t just happen after an online course, module, or unit. It can (and should) happen before, during, and after the learning. I like to say that assessment has a triple function: it is diagnostic, formative, and summative, helping us gauge where students are before, during, and after the learning. Figure 1 above illustrates how these three types of assessment are interconnected.

2. Know Why We Want To Assess

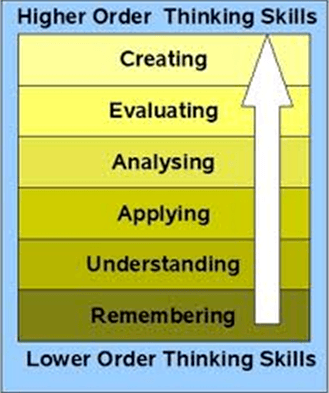

Assessments should really be about measuring learning outcomes. And learning outcomes should be about students demonstrating what they know and, more importantly, what they can do (skills). Learning outcomes can be low-level (recalling information) or high-level (analyzing information). A great, time-tested resource for understanding the various levels of learning, which we can then assess, is Bloom’s Taxonomy (see Figure 2).

Figure 2: Bloom's Taxonomy of Cognitive Domains of Learning. Source: Wikimedia Commons

3. Choose The Right Tool To Assess The Right Set Of Skills

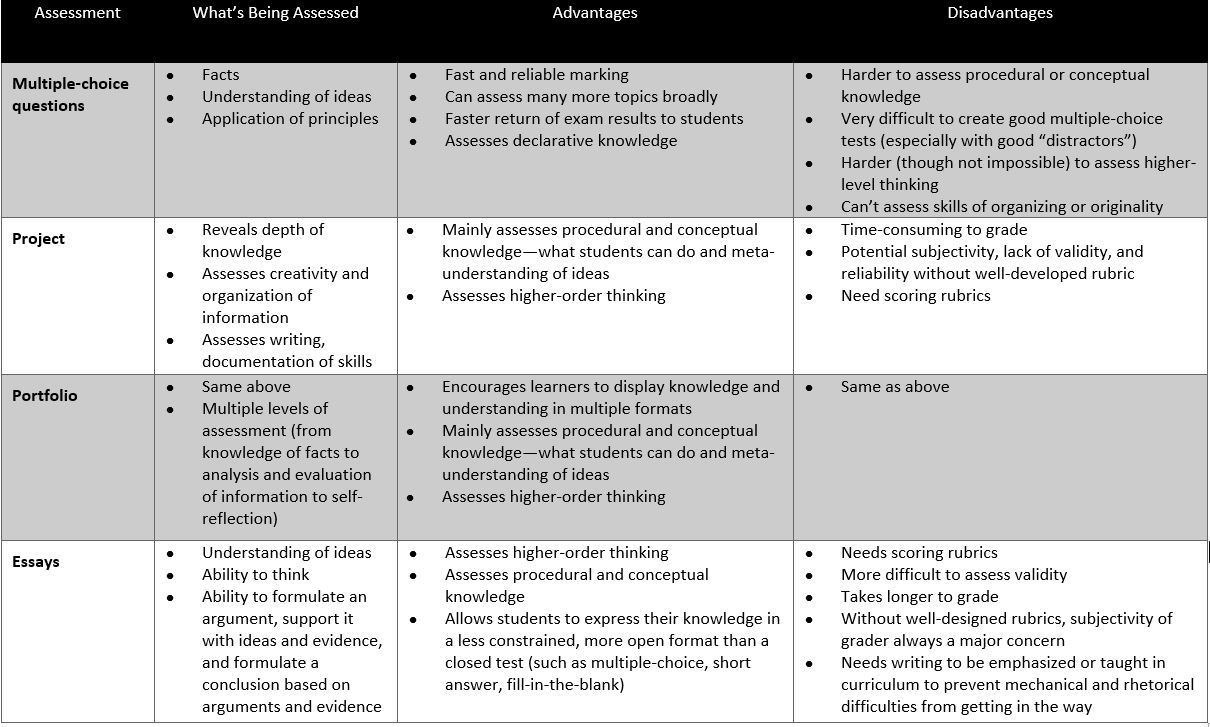

There are many different types of assessments: tests, projects, performance-based tasks, essays, and more. Each has a particular function, so each may be appropriate or inappropriate depending on what we want to assess. That makes choosing the right assessment tool or method paramount. Figure 3 may help us sort through common types of assessment tools and methods.

Figure 3: Common Types of Assessments and Their Advantages and Disadvantages (Adapted from Commonwealth of Learning & Asian Development Bank, 2008: 4–13, 4–14)

Remember, It’s Either "Open" Or "Closed"

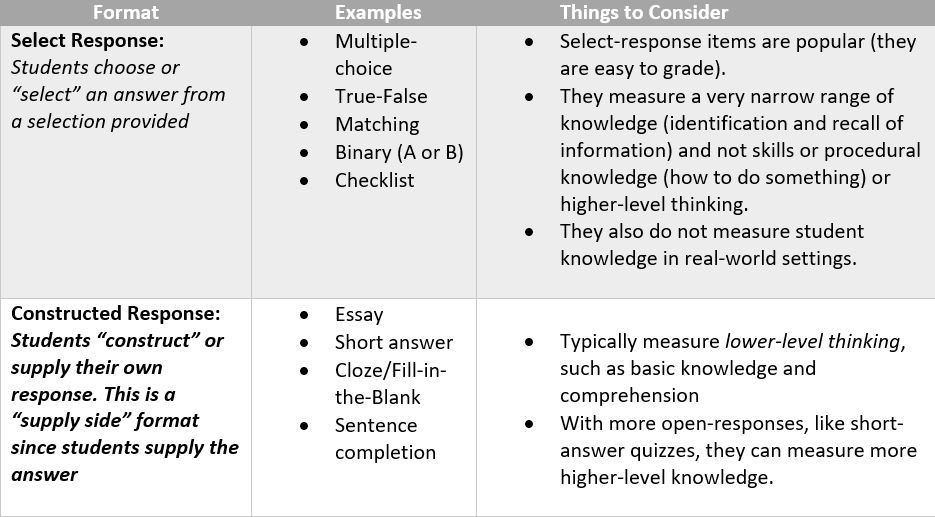

As Figure 4 shows, there are broadly two types of assessment methods: select-response methods and constructed-response methods.

With select-response methods, online learners "select" an answer from a set of options provided to them. True/False and Multiple Choice are the most common select-response methods. Select-response items are good for assessing recall and recognition of facts and limited types of reasoning. They are poorly suited to assessing what students can do (skills).

With constructed-response methods, learners "construct" or "supply" their own response. Constructed-response items are good for eliciting descriptions and explanations. Simple constructed-response items (such as fill-in-the-blank) still measure fairly low-level skills. However, more open constructed-response assessments (such as essays) can assess deeper knowledge and student thinking.

The built-in assessment features of learning management systems (LMSs) and of content development tools such as Articulate 360 handle select-response items far better than constructed-response items.

Figure 4: Select vs. Constructed (or "Supply") Responses

4. OK, Since We Are All Going To Create Multiple-Choice Tests Anyway, Listen Up…

We all love multiple-choice tests, and they are ubiquitous in online learning. They are easy to take, easy to score, and easy to create. Except they are not easy to create. They are actually hard to create well, and most of us, unless we are psychometricians, don’t create them all that well. So here are some guidelines for creating multiple-choice tests (which, hopefully, I will start following, too!).

- Make the stem (the part of the item that poses the problem) clear, phrase it as a question, and keep it brief.

- For higher-level questions (e.g., focusing on analysis, synthesis or evaluation), the stem should have more information, but it should still be brief.

- Make responses direct, with no extra, meaningless information. The learner should never be able to guess the correct answer from the way the response is written.

- Don’t use "All of the above" or "None of the above". These options reward learners who don’t fully know the material: a learner who spots two correct options can choose "All of the above" without knowing the rest. If you MUST use "None of the above", use it with caution. Experts say that if you use this option, it should be the correct answer only about 1 in 4 times.

- Make sure all of your answers—the correct answer and the distractors (the incorrect answers)—are consistent in length, style, etc. We don’t want students getting the right answer because it looks different from the wrong answers.

- Increase the plausibility of distractors by including extraneous information and choosing distractors based on common student errors. But remember, the point of distractors is to figure out where students have weaknesses—so we can address these errors in thinking.

- Pay attention to language. Avoid grammar, spelling, and mechanical errors, which can make it hard for students even to work out what the response options are saying.

- Avoid absolute terms such as "always" and "never". Few things are true always or never, so options that use these words are usually wrong, and that gives the answer away.

- Avoid double negatives (especially if Vladimir Putin is in your online course...).

- Distractors should reflect common student misconceptions. We want to be able to differentiate among high-, medium-, and low-level performers, so, again, we have to pay special attention to how we construct distractors.

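- Use 4 possible responses, not 3. Three makes it more likely that students will guess the correct response: a blind guess is right one time in three (about 33%) with three options, but one time in four (25%) with four.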

- Each question should stand alone. Don’t relate or connect questions. We want to avoid "double jeopardy" where if a learner answers one question incorrectly, another answer will also be incorrect.
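To make the guessing point concrete, here is a minimal Python sketch that models blind guessing as a binomial process: on an item with k options, a pure guess is right with probability 1/k, and guesses on separate items are assumed to be independent. The function name `prob_pass_by_guessing`, the 10-item quiz, and the pass mark of 6 correct answers are hypothetical values chosen only for illustration.

```python
from math import comb


def prob_pass_by_guessing(num_items: int, num_options: int, pass_mark: int) -> float:
    """Probability of getting at least `pass_mark` items right by blind guessing.

    Assumes every item has `num_options` options with exactly one correct,
    and that each guess is independent and uniformly random (binomial model).
    """
    p = 1 / num_options  # chance of guessing a single item correctly
    return sum(
        comb(num_items, k) * p**k * (1 - p) ** (num_items - k)
        for k in range(pass_mark, num_items + 1)
    )


if __name__ == "__main__":
    # Hypothetical 10-item quiz where 6 correct answers counts as a pass.
    for options in (3, 4):
        chance = prob_pass_by_guessing(num_items=10, num_options=options, pass_mark=6)
        print(f"{options} options: {chance:.1%} chance of passing by guessing")
```

Under these assumptions, the script reports about a 7.7% chance of passing by guessing alone with three options, versus about 2.0% with four. Real learners rarely guess completely blind, since they can often rule out weak distractors, which is one more reason to make distractors plausible.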

Finally, remember that the point of a test is validity: figuring out what students really do know, not helping them "succeed" by "gaming" the system.

5. Benjamin Bloom Is Your Friend. Really.

Again, Bloom’s Taxonomy is a great resource for designing assessments. It gives us verbs matched to each level of learning outcome. If you don’t like Bloom’s Taxonomy, and many don’t (so 1950s... the "Mad Men" of learning taxonomies!), you can use other taxonomies by Marzano and others. Whatever you use, a taxonomy of learning outcomes with accompanying language will help scaffold the creation of your assessments.
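As a rough illustration of how a taxonomy's verbs can scaffold assessment design, here is a minimal Python sketch that reads the leading verb of a learning outcome, looks up its level in Bloom's original cognitive taxonomy (knowledge, comprehension, application, analysis, synthesis, evaluation), and suggests a response format. The verb lists, the function name `classify_outcome`, and the rule that only knowledge-level outcomes map to select-response items are hypothetical simplifications, not part of Bloom's work or of any particular tool.

```python
from __future__ import annotations

# Hypothetical, abbreviated verb lists for the six levels of Bloom's original
# cognitive taxonomy. Published verb lists are much longer, and different
# sources assign some verbs to different levels.
BLOOM_VERBS: dict[str, set[str]] = {
    "knowledge": {"define", "list", "recall", "identify", "name"},
    "comprehension": {"explain", "summarize", "classify", "describe", "paraphrase"},
    "application": {"apply", "use", "solve", "demonstrate", "calculate"},
    "analysis": {"analyze", "compare", "differentiate", "organize", "examine"},
    "synthesis": {"design", "compose", "construct", "plan", "create"},
    "evaluation": {"evaluate", "judge", "critique", "justify", "assess"},
}

# Assumed heuristic drawn from this article's select vs. constructed
# discussion: select-response items suit recall and recognition, so only
# knowledge-level outcomes are mapped to them.
SELECT_RESPONSE_LEVELS = {"knowledge"}


def classify_outcome(outcome: str) -> tuple[str, str] | None:
    """Return (Bloom level, suggested response format) for an outcome.

    Only the first word is inspected, assuming outcomes are written as
    "<verb> <object>", e.g. "Compare two sampling methods".
    Returns None if the verb is not in the abbreviated lists above.
    """
    words = outcome.strip().split()
    if not words:
        return None
    verb = words[0].lower().strip(",.;:")
    for level, verbs in BLOOM_VERBS.items():
        if verb in verbs:
            if level in SELECT_RESPONSE_LEVELS:
                return level, "select response"
            return level, "constructed response"
    return None


if __name__ == "__main__":
    for outcome in (
        "List the stages of mitosis",
        "Compare two approaches to formative assessment",
        "Design a rubric for a group project",
        "Appreciate modern poetry",
    ):
        print(f"{outcome!r} -> {classify_outcome(outcome)}")
```

The last example returns `None` because "appreciate" is not in any of the sketch's verb lists; in practice, an unmatched verb is a useful prompt to rewrite the outcome in more measurable language.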

Developing good online assessments is hard. We need to know why we are assessing. Assessments must be aligned with learning outcomes and instructional activities, and they demand precise language and a good deal of revision. Above all, we need to use the right assessment tool to measure the right skill. Without these practices, even the best technology will not save a poorly designed assessment.

References:

Commonwealth of Learning & Asian Development Bank (Eds.). (2008). Quality assurance in open and distance learning: A toolkit. Vancouver, BC: Commonwealth of Learning and Manila, Philippines: Asian Development Bank.